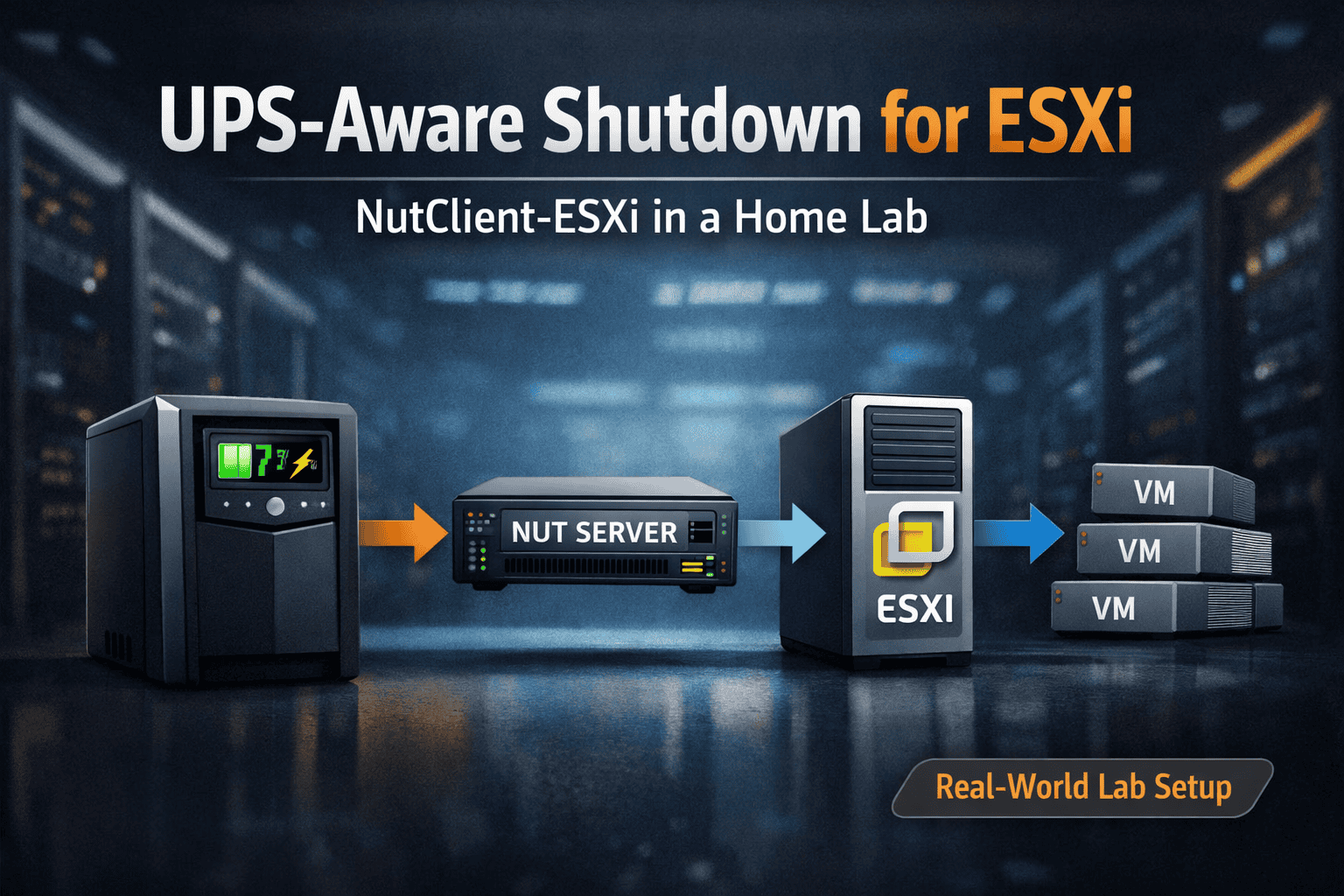

My home lab is no longer a playground for experiments. It has evolved into a stable, reproducible, and deliberately designed infrastructure, closely aligned with real-world operational requirements.

One topic that is often underestimated – even in professional environments – is power stability and failure handling. While a UPS protects hardware from sudden power loss, it does not automatically protect data integrity or system consistency.

This gap is exactly where NutClient-ESXi comes in.

GitHub – rgc2000/NutClient-ESXi: Network UPS Tools client for VMware ESXi

🔌 Why ESXi Needs UPS-Aware Shutdown Logic

Out of the box, ESXi has no native mechanism to react intelligently to UPS events. Without additional tooling, the hypervisor keeps running until power is gone, resulting in a hard stop.

NutClient-ESXi acts as a Network UPS Tools (NUT) client for ESXi, allowing the host to react to UPS status information provided by a central NUT server.

When the UPS reaches a critical battery level:

- ESXi receives the shutdown signal

- Virtual machines are shut down gracefully (VMware Tools required)

- The ESXi host powers off cleanly

This controlled sequence is essential for environments running stateful or time-sensitive workloads.

⚡ Short Power Events vs. Real Outages

In practice, a UPS is triggered far more often by short power interruptions or grid frequency fluctuations than by full blackouts.

In most cases:

- Power returns within seconds

- The UPS absorbs the instability

- No shutdown is required

This is normal and expected behavior.

However, recent large-scale incidents, such as extended power outages in Berlin, have shown that power does not always return within the UPS battery window.

When that happens:

- Battery capacity is exhausted

- Systems are forced into an uncontrolled power-off

- Data integrity is at risk

A UPS only buys time.

What you do with that time determines the outcome.

🔐 Why Controlled Shutdown Protects Data Integrity

A controlled shutdown ensures:

- Filesystem buffers are flushed

- Databases close cleanly

- VM disks and snapshots remain consistent

- Services restart in a known-good state

Without this, systems may require recovery, rollback, or manual repair.

For infrastructure that manages real-world processes, this risk is unacceptable.

🧩 My Home Lab Setup (High-Level Overview)

The lab is built around a three-node ESXi cluster running several core services:

- Directory and VPN services

- ioBroker for solar battery energy flow control

- File and backup services

- Monitoring and management VMs

- Changing test environments for my professional role and for evaluating new technologies

The UPS itself is not connected directly to the ESXi hosts. Instead, it is attached to a separate system acting as a NUT server. NutClient-ESXi bridges this gap and enables each ESXi host to react to UPS events over the network.

⚠️ A Critical Look at the Host Acceptance Level

Installing NutClient-ESXi requires lowering the ESXi acceptance level:

esxcli software acceptance set --level=CommunitySupported

While this enables the installation, it is not a harmless configuration change and must be treated with caution.

Lowering the acceptance level means that ESXi will:

- Accept unsigned and community-built VIBs

- Load components that are not validated or certified by VMware

- Potentially lose or limit vendor support eligibility

- Expose the host to a larger attack surface

From a security and operations perspective, this effectively opens the door to unverified code running at the hypervisor level.

🚫 Production Environments: A Clear No

This setting has no place in production environments.

Lowering the acceptance level in production:

- Bypasses established trust and validation chains

- Makes it difficult to assess the integrity of installed components

- Creates an entry point for malicious or compromised VIBs

- Undermines security baselines and compliance requirements

In regulated or customer-facing environments, this would be unacceptable and indefensible.

🧪 Controlled Use in a Home Lab

In a home lab, this setting can be acceptable – but only when applied deliberately and transparently.

Best practices for home labs:

- Lower the acceptance level only when absolutely required

- Install well-known, actively maintained, and widely used projects

- Document the change and the technical rationale behind it

- Regularly review installed VIBs and remove unused components

In short:

CommunitySupported is a conscious trade-off, not a convenience setting.

Used carefully, it enables learning and experimentation.

Used carelessly, it opens the hypervisor to unverified and potentially harmful code.
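The review step from the best practices above can be sketched as a small shell helper. It assumes that `esxcli software vib list` prints one VIB per line with the acceptance level as its own column; the function name `list_community_vibs` is made up for this example. Treat it as a starting point, not a finished audit tool.

```shell
#!/bin/sh
# Sketch: list VIBs that were installed at the CommunitySupported level.
# Assumption: "esxcli software vib list" prints a table with one VIB per
# line and the acceptance level as a whitespace-separated column.

# Reads a VIB table on stdin and prints the name (first column) of every
# row that contains the field "CommunitySupported".
list_community_vibs() {
    awk '{
        for (i = 2; i <= NF; i++) {
            if ($i == "CommunitySupported") { print $1; break }
        }
    }'
}

# Only query the host when esxcli is available (i.e. on ESXi itself).
if command -v esxcli >/dev/null 2>&1; then
    echo "Host acceptance level: $(esxcli software acceptance get)"
    echo "CommunitySupported VIBs:"
    esxcli software vib list | list_community_vibs
fi
```

Running this after every image change makes it easy to spot community components that are no longer needed.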

📦 Installing NutClient-ESXi via vLCM Single Image

When managing ESXi hosts through vSphere Lifecycle Manager (vLCM) using a Single Image, NutClient-ESXi should be deployed as part of the cluster image, not installed manually on individual hosts.

High-Level Steps

- Import the offline bundle

  - vCenter → Lifecycle Manager → Import Updates

  - Upload the NutClient-ESXi offline bundle ZIP

- Add the component to the cluster image

  - Cluster → Updates → Image

  - Add NutClient-ESXi under Components

- Validate and remediate

  - Review acceptance level warnings

  - Remediate the cluster to apply the updated image

After remediation, all hosts are image-compliant and NutClient-ESXi is installed consistently.

Important Notes

- Requires CommunitySupported acceptance level

- Applies to all hosts in the cluster

- Removal is done by deleting the component from the image and re-remediating

Using vLCM keeps the setup consistent, auditable, and reproducible, even when working with community components.

🔧 NutClient-ESXi Configuration via Simple Bash Script

For my lab, I deliberately keep the NutClient configuration simple, transparent, and close to ESXi internals. Instead of additional automation layers, I configure the required Advanced Settings directly on the host using esxcli. This mirrors what ESXi itself uses and avoids API or module limitations.

The script below is executed directly on the ESXi host (or via SSH as root) after the NutClient-ESXi VIB is installed.

configure-nutclient-esxi.sh:

#!/bin/sh
#
# Simple NutClient-ESXi configuration script
# Run directly on the ESXi host (or via SSH as root)
#

# ===== CONFIGURATION =====

# Name of the UPS as defined on the NUT server.
# Format: ups_name@nut-server-hostname
# This tells ESXi which UPS to monitor via the NUT server.
NUT_UPS_NAME="ups1@nut-server.lab.local"

# Username used by ESXi to authenticate against the NUT server.
# If multiple NUT servers are configured, all must accept the same credentials.
NUT_USER="nutuser"

# Password for the NUT user.
# !! Stored in plaintext, so make sure the user is read-only !!
NUT_PASSWORD="nutpassword"

# Number of seconds ESXi waits AFTER receiving a low-battery event
# before initiating the shutdown sequence.
# Useful to allow final state updates or short recovery windows.
NUT_FINAL_DELAY=60

# Number of seconds ESXi is allowed to run on battery power
# BEFORE initiating shutdown.
# Set to 0 to disable and wait only for the low-battery event.
NUT_ON_BATTERY_DELAY=300

# Minimum number of power supplies required to keep the host running.
# If fewer supplies are detected, ESXi will initiate shutdown.
# Typical value for single-PSU systems: 1
NUT_MIN_SUPPLIES=1

# Controls email notifications for UPS events:
# 0 = no email
# 1 = basic notification
# 2 = detailed notification including full UPS status
NUT_SEND_MAIL=0

# Destination email address for UPS event notifications.
# Only used if NUT_SEND_MAIL is set to 1 or 2.
NUT_MAIL_TO="admin@lab.local"

# Optional SMTP relay used to send notification emails.
# Leave empty ("") to disable SMTP relay usage.
NUT_SMTP_RELAY=""

# ===== APPLY SETTINGS =====

echo "Configuring NutClient-ESXi advanced settings..."

# String settings are written with -s
esxcli system settings advanced set \
    -o /UserVars/NutUpsName \
    -s "${NUT_UPS_NAME}"

esxcli system settings advanced set \
    -o /UserVars/NutUser \
    -s "${NUT_USER}"

esxcli system settings advanced set \
    -o /UserVars/NutPassword \
    -s "${NUT_PASSWORD}"

esxcli system settings advanced set \
    -o /UserVars/NutMailTo \
    -s "${NUT_MAIL_TO}"

esxcli system settings advanced set \
    -o /UserVars/NutSmtpRelay \
    -s "${NUT_SMTP_RELAY}"

# Integer settings are written with -i
esxcli system settings advanced set \
    -o /UserVars/NutFinalDelay \
    -i "${NUT_FINAL_DELAY}"

esxcli system settings advanced set \
    -o /UserVars/NutOnBatteryDelay \
    -i "${NUT_ON_BATTERY_DELAY}"

esxcli system settings advanced set \
    -o /UserVars/NutMinSupplies \
    -i "${NUT_MIN_SUPPLIES}"

esxcli system settings advanced set \
    -o /UserVars/NutSendMail \
    -i "${NUT_SEND_MAIL}"

echo "NutClient-ESXi configuration completed."

Notes:

- Changes take effect immediately; no reboot required.

- Ensure the NutClient service is set to Start and stop with host.
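To double-check what actually ended up on the host, the settings can be read back with `esxcli system settings advanced list`. The sketch below assumes the usual output layout of that command (`Type:`, `Int Value:` and `String Value:` lines); the helper names `parse_setting_value` and `show_nut_settings` are made up for this example. The password is deliberately left out of the listing.

```shell
#!/bin/sh
# Sketch: read back the NutClient-ESXi advanced settings after configuration.
# Assumption: "esxcli system settings advanced list -o <path>" prints
# "Type:", "Int Value:" and "String Value:" lines for the option.

# Reads the output for ONE option on stdin and prints its current value.
parse_setting_value() {
    awk '
        { sub(/^[ \t]+/, "") }                       # strip leading indentation
        /^Type:/         { type = $2 }
        /^Int Value:/    { sub(/^Int Value:[ \t]*/, "");    int_val = $0 }
        /^String Value:/ { sub(/^String Value:[ \t]*/, ""); str_val = $0 }
        END { if (type == "integer") print int_val; else print str_val }
    '
}

# Prints "name value" for every NutClient option except the password.
show_nut_settings() {
    for name in NutUpsName NutUser NutFinalDelay NutOnBatteryDelay \
                NutMinSupplies NutSendMail NutMailTo NutSmtpRelay; do
        value=$(esxcli system settings advanced list -o "/UserVars/${name}" | parse_setting_value)
        printf '%-18s %s\n' "${name}" "${value}"
    done
}

# Only query the host when esxcli is available (i.e. on ESXi itself).
if command -v esxcli >/dev/null 2>&1; then
    show_nut_settings
fi
```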

🔍 Verifying Connectivity to the NUT Server

After configuring the settings, it’s important to confirm that ESXi can actually reach and query the NUT server. NutClient ships with the standard NUT tools, including upsc.

Run the following command on the ESXi host:

/opt/nut/bin/upsc ups1@nut-server.lab.local

What to Expect

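- You should see a list of UPS variables (e.g. battery.charge, ups.status, input.voltage).

- This confirms:

  - Network connectivity to the NUT server

  - Correct UPS name (ups1)

  - Functional NUT protocol communication

- Note that upsc reads variables without logging in, so the username and password are only checked once the NutClient service itself connects to the NUT server.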
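For a quicker look than the full variable list, upsc can also be asked for a single variable. The sketch below reads ups.status and translates the standard NUT flags OL, OB and LB into plain text. The function name `describe_ups_status` is made up for this example, and the UPS name is the same sample value used throughout this article.

```shell
#!/bin/sh
# Sketch: translate the NUT ups.status flags into a short summary.
# OL, OB and LB are standard NUT status flags; anything else is passed through.

# Takes the status string (e.g. "OB LB") and prints one line per flag.
describe_ups_status() {
    for flag in $1; do
        case "${flag}" in
            OL) echo "on line power" ;;
            OB) echo "on battery" ;;
            LB) echo "low battery" ;;
            *)  echo "other flag: ${flag}" ;;
        esac
    done
}

UPS="ups1@nut-server.lab.local"   # same value as UserVars.NutUpsName
UPSC="/opt/nut/bin/upsc"          # path used in this article

# Only query the UPS when the NutClient tools are installed.
if [ -x "${UPSC}" ]; then
    status=$("${UPSC}" "${UPS}" ups.status) || exit 1
    describe_ups_status "${status}"
fi
```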

If this command fails:

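- Recheck UserVars.NutUpsName, username, and password

- Verify firewall rules between ESXi and the NUT server

- Confirm the UPS name on the NUT server (upsc -l on the server side lists the configured names). Do not use upsmon -c fsd for this: it is not a lookup command and triggers a forced shutdown.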
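When the query fails, a plain TCP check helps separate network problems from NUT configuration problems. The sketch below splits the UPS identifier into host and port (3493 is the NUT default) and probes the port with `nc -z`. The helper names `nut_host` and `nut_port` are made up for this example, and the identifier is expected in the `upsname@host[:port]` form.

```shell
#!/bin/sh
# Sketch: split a NUT UPS identifier (upsname@host[:port]) and test the TCP port.
# 3493 is the default NUT port; nc -z only checks that the port accepts
# connections, it does not speak the NUT protocol.

# Prints the host part of "upsname@host[:port]".
nut_host() {
    hostport=${1#*@}          # drop "upsname@"
    echo "${hostport%%:*}"    # drop optional ":port"
}

# Prints the port part, falling back to the NUT default.
nut_port() {
    hostport=${1#*@}
    case "${hostport}" in
        *:*) echo "${hostport##*:}" ;;
        *)   echo 3493 ;;
    esac
}

UPS="ups1@nut-server.lab.local"   # same value as UserVars.NutUpsName

# Only probe the network when the NutClient tools are installed.
if [ -x /opt/nut/bin/upsc ]; then
    host=$(nut_host "${UPS}")
    port=$(nut_port "${UPS}")
    if nc -z "${host}" "${port}"; then
        echo "TCP ${host}:${port} is reachable"
    else
        echo "TCP ${host}:${port} is NOT reachable (check firewalls and the NUT server's LISTEN address)"
    fi
fi
```

If the port is reachable but upsc still fails, the UPS name is the most likely culprit.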

🧠 Why This Matters

A successful upsc query means the control path is complete:

UPS → NUT Server → ESXi → NutClient.

Only with this verified does a controlled, UPS-aware shutdown become reliable when the battery runtime is exhausted.

This keeps the lab predictable, debuggable, and aligned with real-world operations – exactly what I want from a setup that doubles as a professional test environment.
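Whether the shutdown actually finishes before the battery is empty comes down to simple arithmetic. The sketch below is a rough budget check under a conservative assumption: both delays and the shutdown itself run back to back after the power fails. The runtime and the shutdown estimate are hypothetical numbers, and `shutdown_margin` is a made-up helper name; the real runtime can be read from the standard NUT variable battery.runtime.

```shell
#!/bin/sh
# Sketch: does the shutdown sequence fit into the UPS battery runtime?
# Assumption (conservative): on-battery delay, final delay and the shutdown
# itself run one after another once the power fails.

# Usage: shutdown_margin RUNTIME ON_BATTERY_DELAY FINAL_DELAY SHUTDOWN_ESTIMATE
# Prints the remaining runtime in seconds (negative: the battery runs out first).
shutdown_margin() {
    echo $(( $1 - $2 - $3 - $4 ))
}

RUNTIME=900            # hypothetical: battery.runtime reported by the UPS, in seconds
ON_BATTERY_DELAY=300   # NUT_ON_BATTERY_DELAY from the configuration script
FINAL_DELAY=60         # NUT_FINAL_DELAY from the configuration script
SHUTDOWN_ESTIMATE=240  # hypothetical: time the VMs and the host need to power off

margin=$(shutdown_margin "${RUNTIME}" "${ON_BATTERY_DELAY}" "${FINAL_DELAY}" "${SHUTDOWN_ESTIMATE}")
if [ "${margin}" -lt 0 ]; then
    echo "Shutdown would not finish in time (short by $(( -margin )) s)"
else
    echo "Shutdown fits with ${margin} s to spare"
fi
```

With the sample values this leaves a margin of 300 seconds; an aging battery or a higher load shrinks that margin, so it is worth rechecking from time to time.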

✅ Conclusion

NutClient-ESXi fills a real gap in ESXi environments by enabling UPS-aware, automated shutdowns for hosts and virtual machines.

Short outages are common.

Long outages are rare – but real.

Controlled shutdowns protect data integrity when power does not come back.
